AI and This Book

Kainan Jarrette and Diana Daly

AI can be an incredibly helpful tool, but it’s important to be transparent about its use. When developing this book, we used AI (mostly ChatGPT and occasionally Google Gemini) for support in various areas:

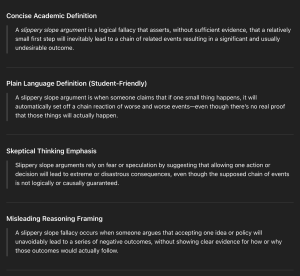

Help with Wording for Definitions

How terminology is defined is very important, particularly when first introducing that terminology to readers. You don’t want something overly complicated or detailed, but you also don’t want something that’s oversimplified.

Typically, we would ask AI to generate several different definitions of a term, each of varying complexity or aimed at different audiences. We would then take what we liked from those options to create a “hybrid” definition that we felt was most appropriate for this book.

Help Creating Images

Neither of the authors of this book has particularly good or fast illustration skills, nor did we have enough funding to hire a professional illustrator. As such, we used generative AI to help us create images.

From previous work, we knew that it’s both quicker and more fun to not hand everything over to AI. Our prompts were usually pretty specific in terms of content, framing, etc., as opposed to overly broad. We’ll give an example below.

An overly broad prompt would look like:

Generate a cartoon showing a slippery slope argument.

Which created this image:

If anyone can figure out what’s actually happening here, please let us know!

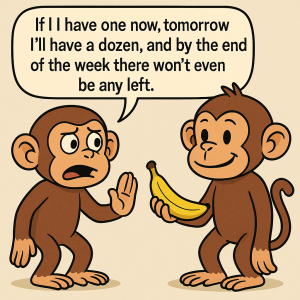

A more specific prompt (that we used for an image in this book) would be:

Make me an image of:

– Two cartoon monkeys

– One monkey is on the right side of the frame and is holding out (offering) a banana to the second monkey who is on the left side of the frame

– The second monkey (the one on the left side of the frame) is holding its hand out to decline the banana

– The second monkey (the one on the left side of the frame) is also saying “If I have one now, tomorrow I’ll have a dozen, and by the end of the week there won’t even be any left.”

Which created this image:

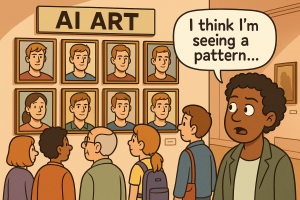
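For readers who assemble prompts like this in a script, the structure of the specific prompt above could be sketched as follows. This is only an illustration: we wrote our prompts by hand, the helper name `build_image_prompt` is hypothetical, and the code only formats text (it does not call any AI service).

```python
def build_image_prompt(details):
    """Assemble a specific image prompt from a list of details.

    Hypothetical helper: it only formats text and does not call any AI service.
    """
    header = "Make me an image of:"
    # One dash-prefixed line per non-empty detail, mirroring the prompt above.
    bullets = [f"– {d.strip()}" for d in details if d.strip()]
    return "\n".join([header] + bullets)


details = [
    "Two cartoon monkeys",
    "One monkey is on the right side of the frame and is holding out (offering) a banana to the second monkey who is on the left side of the frame",
    "The second monkey (the one on the left side of the frame) is holding its hand out to decline the banana",
    "The second monkey (the one on the left side of the frame) is also saying “If I have one now, tomorrow I’ll have a dozen, and by the end of the week there won’t even be any left.”",
]

prompt = build_image_prompt(details)
print(prompt)
```

Keeping each detail as its own list item makes it easy to adjust one element (say, which side a character stands on) without rewriting the whole prompt.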

An Example of AI Bias

When it came to images involving people, we would usually specify in the prompt that the people should be of a random gender and ethnicity (and, in some cases, a random age). This was because we quickly realized that, left to its own devices, AI tends to create its human characters as young white men.

While certainly not a perfect fix, asking it to randomize gender and ethnicity hopefully helped to create at least slightly more diversity and representation among the images.

Help Brainstorming Examples

This mostly refers to the Logical Fallacies section of this book. We would ask AI to provide us with lists of examples of whatever fallacy we were covering, often generating between 10 and 20 examples. These would typically be broken down into “general” examples and “context-specific” examples (meaning situations readers would be more likely to encounter in their own lives).

These examples were used to help create both the cartoon images found in the chapters and the questions in the various Knowledge Checks.

It was rare that we would directly use one of those examples, but they helped provide some structure for our own creativity. For instance, the above example of a slippery slope using monkeys came about as an adaptation of an example AI gave us of a human character rejecting cookies.
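A brainstorming request of the kind described in this section could be templated roughly as shown here. This is a sketch under assumptions: the wording of the request and the function name `build_examples_prompt` are our own illustration, not the exact prompts we used, and the code only builds a string.

```python
def build_examples_prompt(fallacy, general=5, context_specific=5):
    """Build a request for fallacy examples, split into two labeled groups.

    Hypothetical wording; adjust the counts to ask for more or fewer examples.
    """
    total = general + context_specific
    return (
        f"Give me {total} examples of the {fallacy} fallacy. "
        f"Label {general} of them as general examples and "
        f"{context_specific} as context-specific examples, meaning situations "
        "readers would be likely to encounter in their own lives."
    )


request = build_examples_prompt("slippery slope")
print(request)
```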

Help With Visual Theme Decisions

It was important to us that the book not simply be aesthetically pleasing to our personal tastes, but that its aesthetics follow best practices in design and learning. To this end, we asked AI to help identify best practices for things like fonts and color schemes.

AI Think You Should Try Again

While there are definitely impressive aspects of how AI can generate art, it’s far from replicating the work of a real human artist. Below are some fun examples of early or unused drafts of images for this book, all highlighting some issues with AI-generated art.

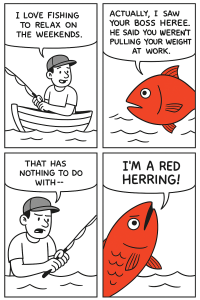

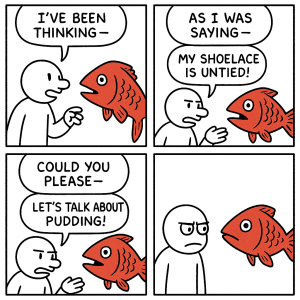

Close, but No Red Herring

As discussed above, overly broad prompts leave too much to an AI’s “imagination”… and its imagination isn’t very good, nor is it very coherent. These comics were based on an overly broad prompt looking to generate a comic humorously showing a red herring argument.

What’s interesting to note is how the AI seems to understand the general structure it’s going for, but is seemingly lost on how to fill that structure in with appropriate content.

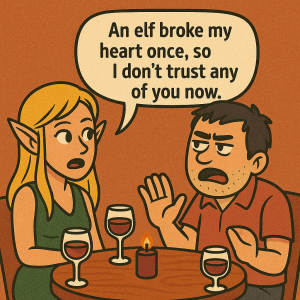

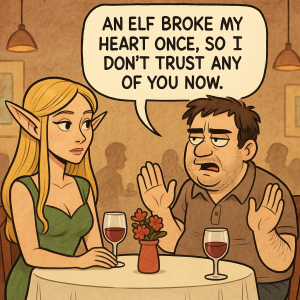

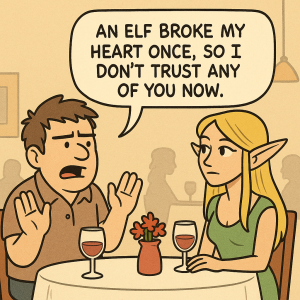

Not Too Cold, Not Too Hot, Just Right

Other times, AI gets the basics pretty well but needs some fine-tuning. Below are some stages of a cartoon we ended up using:

Extra Appendages and Missing Windows

Sometimes, even when you have more specific prompts, AI can still make mistakes that a human would be unlikely to make, such as adding extra appendages (like tails) or forgetting that cars have windshields.

Media Attributions

- AI Definitions Example Screenshot

- Slippery Slope and a Baby © ChatGPT is licensed under a CC0 (Creative Commons Zero) license

- No Bananas Please © ChatGPT is licensed under a CC0 (Creative Commons Zero) license

- AI Art Bias © ChatGPT is licensed under a CC0 (Creative Commons Zero) license

- AI Fallacy Examples Screenshot

- AI Mish Mash © ChatGPT is licensed under a CC0 (Creative Commons Zero) license

- Fishing Fun with a Twist is licensed under a CC0 (Creative Commons Zero) license

- Red Herring Blooper 02 is licensed under a CC0 (Creative Commons Zero) license

- Red Herring Interruptions is licensed under a CC0 (Creative Commons Zero) license

- Date Blooper 01 is licensed under a CC0 (Creative Commons Zero) license

- Date Blooper 02 is licensed under a CC0 (Creative Commons Zero) license

- Date Blooper 03 is licensed under a CC0 (Creative Commons Zero) license

- AI Blooper 02 is licensed under a CC0 (Creative Commons Zero) license

- AI Blooper 03 is licensed under a CC0 (Creative Commons Zero) license