Bot Detection

Kainan Jarrette and Nina Kotova

Learning Objectives

- Understand what a bot is and how it relates to misinformation/disinformation

- Learn strategies to identify bots

Introduction

Bots are software applications or scripts that automatically perform tasks on the web. Not all of these tasks are harmful, which is why bots are typically classified as either innocuous or malicious.

Innocuous bots are designed to do relatively benign tasks, like automatically replying to or sending out emails, operating simple feeds, and so on. While they can still potentially be tricked into spreading misinformation, that was never the original goal of their design and creation.

Malicious bots, however, are specifically designed to spread misinformation and manipulate online discourse. This usually involves creating fake accounts, and creating and sharing specific posts and information. These bots generally try to imitate human behavior and communication on the internet, with varying degrees of success. These are the bots we’re primarily concerned with (so when you see us use the term “bot,” just know we mean “malicious bot”).

Bots tend not to be completely singular agents, but rather one piece of a much larger botnet: a coordinated network of bots that are all used toward a single purpose. As you’ll see, most of the malicious activity of bots isn’t just about a particular technique, but about using that technique at a large volume. Although there is a whole process by which bots are created (Jena, 2023), often by hijacking other people’s computers, here we’re more interested in how they behave, and how to spot them.

Activity and Detection

Section 1.1: Typical Malicious Activity

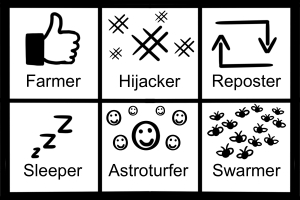

So what does a bot typically do that’s so bad? Well, there are some common attack behaviors (CISA, 2021), which are the methods by which social media bots influence and/or engage with humans online:

- Farming Clicks/Likes: bots can be used to increase an account’s popularity by liking or reposting its content.

- Hashtag Hijacking: when a specific audience is using a particular hashtag, bots can use that same hashtag to attack that audience.

- Repost Storming: when a parent bot creates a new post, child bots will all immediately repost it.

- Sleeping: sleeper bots go inactive for an extended length of time before suddenly becoming active, flooding the platform with numerous posts or retweets in a short period.

- Astroturfing: when bots share coordinated content to give the false impression of a “grassroots” movement.

- Swarming: when bots overwhelm targeted accounts with spam, usually with the goal of shutting down opposing voices.
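To make one of these behaviors concrete, here is a minimal sketch of how repost storming might be spotted in data. It is only an illustration: the function names, the 10-second window, and the three-account minimum are all assumptions made for this example, not values used by any real platform.

```python
from datetime import datetime, timedelta


def fast_reposters(original_time, reposts, window_seconds=10):
    """Return the accounts that reposted within `window_seconds` of the original post.

    `reposts` is a list of (account_name, repost_time) pairs.
    The window size is a hypothetical threshold chosen for illustration.
    """
    window = timedelta(seconds=window_seconds)
    return [
        account
        for account, repost_time in reposts
        if timedelta(0) <= repost_time - original_time <= window
    ]


def looks_like_repost_storm(original_time, reposts, window_seconds=10, min_accounts=3):
    """Flag a post when several distinct accounts repost it almost immediately."""
    fast = set(fast_reposters(original_time, reposts, window_seconds))
    return len(fast) >= min_accounts


# Example: three accounts repost within seconds, one reposts two hours later.
posted = datetime(2024, 1, 1, 12, 0, 0)
reposts = [
    ("child_bot_1", posted + timedelta(seconds=2)),
    ("child_bot_2", posted + timedelta(seconds=3)),
    ("child_bot_3", posted + timedelta(seconds=5)),
    ("regular_user", posted + timedelta(hours=2)),
]
print(fast_reposters(posted, reposts))
print(looks_like_repost_storm(posted, reposts))
```

Notice that a genuinely popular post could trip the same check, since real people also repost quickly.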

Section 1.2: Detection Methods

We may know what the typical behaviors of bots are, but we’re quickly confronted with a new issue: these could also be the behaviors of an actual person. As a result, a variety of bot detection methods (Radware, 2024) have been developed to help identify whether a user/account is an actual human:

- Human interaction challenges: having the user pass some form of simple interactive puzzle to “prove” it isn’t a bot. Arguably the most well-known of these puzzles are CAPTCHAs.

- IP checks: checking the IP addresses of suspicious accounts against those of known bots or botnets.

- User behavior analysis: there are certain behaviors humans have when using technology, such as mouse clicks, scrolling, and touch-pad use, that a bot may struggle to replicate.

- Traffic analysis: directly analyzing site traffic behaviors to spot those more indicative of coordinated bot efforts than normal human interaction.

- Time-based analysis: monitoring the time it takes for a user to complete certain tasks, with the idea that unusually fast completion times may indicate bot activity.

- Crowd-sourcing: relying on other, human users to identify bots on their own.

- Machine-learning: using AI to identify and construct patterns of what “human” and “bot” accounts look and behave like.
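Two of the methods above, IP checks and time-based analysis, are simple enough to sketch in a few lines. The example below is a rough illustration only: the listed networks are reserved documentation address ranges standing in for a real blocklist, and the two-second minimum is an assumed threshold, not an industry standard.

```python
import ipaddress

# Hypothetical blocklist. These are reserved documentation ranges,
# used here as stand-ins for networks known to host bots.
KNOWN_BOT_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def ip_is_flagged(address, networks=KNOWN_BOT_NETWORKS):
    """IP check: is this address inside any network on the blocklist?"""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in networks)


def completed_too_fast(seconds_taken, min_human_seconds=2.0):
    """Time-based analysis: was the task finished faster than a person plausibly could?

    The default minimum is an assumption made for this example.
    """
    return seconds_taken < min_human_seconds


print(ip_is_flagged("203.0.113.45"))
print(ip_is_flagged("192.0.2.10"))
print(completed_too_fast(0.3))
print(completed_too_fast(14.0))
```

Neither check is conclusive on its own. A person can share an IP address with a bot, and a bot can simply be programmed to wait before submitting a form.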

Section 1.3: Challenges and Drawbacks

As you can probably guess from the fact that bots are still currently a very real problem, these detection methods are far from perfect. To start, bots have quickly become more sophisticated (with no signs of slowing down), to the point where they can solve most CAPTCHAs. Mimicking supposedly un-mimickable behavior is turning out to be easier for bots than we originally thought. Analytic methods, meanwhile, can be incredibly expensive even for major companies, let alone smaller startups.

It’s also important to realize that a detection method’s accuracy involves both false-negatives and false-positives. A false-negative would involve a detection method concluding an account isn’t a bot when it actually is. A false-positive would involve a detection method concluding an account is a bot when it actually isn’t. Since the primary goal of most social media companies is user engagement and retention, they care much more about avoiding false-positives than false-negatives. A detection method’s value, in a market sense, is going to be directly related to its rate of false-positives.
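The two kinds of error can be counted directly once you know which accounts really are bots. The following sketch assumes a small, made-up set of ten accounts (four bots, six humans); the function name and the data are invented for illustration.

```python
def error_rates(is_actually_bot, was_flagged_as_bot):
    """Compute false-positive and false-negative rates for a detection method.

    Both arguments are equal-length lists of booleans, one entry per account.
    """
    fp = fn = tp = tn = 0
    for actual, flagged in zip(is_actually_bot, was_flagged_as_bot):
        if actual and flagged:
            tp += 1  # bot correctly flagged
        elif actual and not flagged:
            fn += 1  # bot missed (false-negative)
        elif not actual and flagged:
            fp += 1  # human wrongly flagged (false-positive)
        else:
            tn += 1  # human correctly left alone
    humans = fp + tn
    bots = fn + tp
    return {
        "false_positive_rate": fp / humans if humans else 0.0,
        "false_negative_rate": fn / bots if bots else 0.0,
    }


# Made-up data: the first four accounts are bots, the last six are humans.
actual = [True] * 4 + [False] * 6
flagged = [True, True, True, False, True, False, False, False, False, False]
print(error_rates(actual, flagged))
```

In this example the method misses one of the four bots (a false-negative rate of 25%) and wrongly flags one of the six humans (a false-positive rate of about 17%). A platform that cares most about keeping real users would try to push the second number down, even if that means the first number goes up.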

Bot Detection Resources

Section 2.1: Red Flags

Fortunately, you can also do your own part in spotting the bots among us! Here are some potential red flags that an account may be a bot. Individually, each of these doesn’t necessarily indicate an account is a bot. But the more of these red flags an account exhibits, the more likely it’s a bot account:

- Profile Image: bots often have a profile image of some type of cartoon, or no profile image at all.

- Username: bots often have nonsensical usernames, or usernames with excessive numbers in them.

- Bio: bots often have profiles that contain little personal information, but a lot of divisive rhetoric.

- Creation Date: bot accounts were often created very recently (or else came back online recently after a long period of inactivity).

- Followers: bots often have a suspiciously high number of followers, especially relative to their creation date.

- Erratic Behavior: bots often make quick changes in posting habits, including topics of interest and even language.

- Hyperactive Behavior: bots often post an excessively large amount of content in a short period of time.

- Sharing: bots often mostly or even exclusively share posts from other accounts rather than unique posts they create themselves.
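The idea that red flags add up can be sketched as a simple checklist. The example below covers only the flags that are easy to measure (it skips the bio and erratic behavior), and every field name and threshold in it is an assumption chosen for illustration rather than a tested rule.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """A hypothetical summary of a social media account."""
    username: str
    has_profile_image: bool
    account_age_days: int
    followers: int
    posts_last_24h: int
    share_ratio: float  # fraction of posts that are reposts of other accounts


def red_flags(account):
    """Return the names of the red flags this account exhibits.

    All thresholds are illustrative assumptions.
    """
    flags = []
    if not account.has_profile_image:
        flags.append("profile image")
    if sum(ch.isdigit() for ch in account.username) >= 4:
        flags.append("username")
    if account.account_age_days < 30:
        flags.append("creation date")
    if account.account_age_days < 30 and account.followers > 10_000:
        flags.append("followers")
    if account.posts_last_24h > 100:
        flags.append("hyperactive behavior")
    if account.share_ratio > 0.9:
        flags.append("sharing")
    return flags


suspicious = Account("user84920175", False, 5, 25_000, 300, 0.97)
ordinary = Account("nina_k", True, 2_000, 350, 4, 0.30)
print(red_flags(suspicious))
print(red_flags(ordinary))
```

A single flag means very little here, since plenty of real people have numbers in their usernames or no profile image. It is the count of flags that raises suspicion.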

Section 2.2: Digital Tools

There have also been various digital tools developed to help people identify potential bot accounts.

- Botometer uses a characteristic matrix of roughly 1200 items to calculate a score indicating how likely it is that a specific account is a bot (with the low end indicating “human,” and the high end indicating “bot”).

- Bot Sentinel uses machine learning to study social media accounts and classify their trustworthiness, continuing to monitor those labeled as unreliable.

- Hoaxy helps visualize the spread of claims and posts.

Conclusion

As you’ve seen, bots can work in a variety of ways, almost all of which involve coordination, to both create and spread disinformation. As a result, identification techniques have been created and continue to be refined, but the reality is that identifying bots can be difficult. A lot of typical bot behavior is also seen in real people, and we collectively seem to value and prioritize the idea of not incorrectly removing access to a real person. To this end, focusing on the unique differences between bot and bot-like human behavior is helpful, as is educating individuals on the typical red flags that an account may be a bot.

Fortunately, in addition to the moral and ethical reasons behind bot detection, there are also economic ones. There is a constant and growing concern that advertisers are wasting their money (Meaker, 2022) advertising to non-human accounts. Since social media companies primarily rely on their advertising revenue (McFarlane et al., 2022), they have a strong incentive to remove bots from their platforms.

While business and government continue to create and negotiate solutions to the problem of bots, don’t forget you as an individual can always be on guard for suspicious behavior in accounts you interact with. If you think you may be interacting with a bot account, ask yourself: do the benefits of what I’m getting from this account outweigh the risks?

Key Terms

- Astroturfing: the use of bots to make a campaign or movement seem organic when it's not; the name is a play on the term "grassroots," wherein a movement gains traction through natural means of human involvement.

- Attack behaviors: methods in which social media bots influence and/or engage with humans online.

- Bots: software applications or scripts that automatically perform defined tasks.

- Botnet: a network of connected bots, often on computers hijacked through malware.

- CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart. These usually take the form of relatively simple puzzles that some bots may struggle to solve.

- Farming clicks/likes: the practice of using bots to create artificial engagement (usually through actions such as "liking" a post).

- Hashtag hijacking: the practice of bots using a popular hashtag to spread information unrelated to the original intent of that hashtag.

- Innocuous bots: bots that are designed to do relatively benign tasks (like automatically reply or send out emails, operate simple feeds, etc.).

- Malicious bots: bots specifically designed to spread misinformation and manipulate online discourse.

- Repost storming: the practice of bots immediately (and in great volume) reposting content from a parent bot.

- Sleeping: a practice where bots will go dormant for an extended period of time before suddenly becoming intensely active.

- Swarming: the practice of bots targeting a specific account with spam content, usually with the goal of shutting them down.

References

Alfonsas, J., Kasparas, K., Eimantas, L., Gediminas, M., Donatas, R., & Julius, R. (2022). The Role of AI in the battle against disinformation. NATO Strategic Communications Centre of Excellence. https://stratcomcoe.org/publications/the-role-of-ai-in-the-battle-against-disinformation/238

CISA. (2021). Social Media Bots. https://www.cisa.gov/sites/default/files/publications/social_media_bots_infographic_set_508.pdf

Ferrara, E. (2023). Social bot detection in the age of ChatGPT: Challenges and opportunities. First Monday.

Jena, B. K. (2023, February 10). What Is a Botnet, Its Architecture and How Does It Work? SimpliLearn. https://www.simplilearn.com/tutorials/cyber-security-tutorial/what-is-a-botnet

McFarlane, G., Catalano, T. J., & Velasquez, V. (2022, December 2). How Facebook (Meta), X Corp (Twitter), Social Media Make Money From You. Investopedia. https://www.investopedia.com/stock-analysis/032114/how-facebook-twitter-social-media-make-money-you-twtr-lnkd-fb-goog.aspx

Meaker, M. (2022, December 2). How Bots Corrupted Advertising. Wired. https://www.wired.com/story/bots-online-advertising/

Mønsted, B., Sapieżyński, P., Ferrara, E., & Lehmann, S. (2017). Evidence of complex contagion of information in social media: An experiment using Twitter bots. PloS One, 12(9), e0184148.

Morstatter, F., Wu, L., Nazer, T. H., Carley, K. M., & Liu, H. (2016). A new approach to bot detection: Striking the balance between precision and recall. 2016 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), 533–540. https://doi.org/10.1109/ASONAM.2016.7752287

Radware. (2024). Bot Detection. https://www.radware.com/cyberpedia/bot-management/bot-detection/

Villasenor, J. (2020, November 23). How to deal with AI-enabled disinformation. Brookings. https://www.brookings.edu/articles/how-to-deal-with-ai-enabled-disinformation/

Media Attributions

- Private: Robot © Cat Elliott is licensed under a CC BY (Attribution) license

- Private: Types of Bots © Cat Elliott is licensed under a CC0 (Creative Commons Zero) license
